Implementation Research: Context and Influence

In the last couple of posts, I have written about implementation research as discussed in the online BMJ article “Implementation research: what it is and how to do it.” As the authors put it, “Implementation research aims to cover a wide set of research questions, implementation outcome variables, factors affecting implementation, and implementation strategies.”[1]

So far, I’ve introduced the ‘explore’ and ‘describe’ objectives. During the ‘explore’ phase, researchers develop a hypothesis about the outcome of a program intervention. The ‘describe’ objective builds on that hypothesis. It characterizes the context in which implementation occurs and the main factors that influence the program outcome within that context.

In the ‘influence’ phase, researchers test the hypothesis. Did the program intervention produce the expected outcome? Is the desired program outcome observable? If so, is the program responsible for that outcome? In energy programs, we often refer to influence as attribution and tie attribution to the dollars spent. Can we attribute the results we are observing to the program dollars invested?

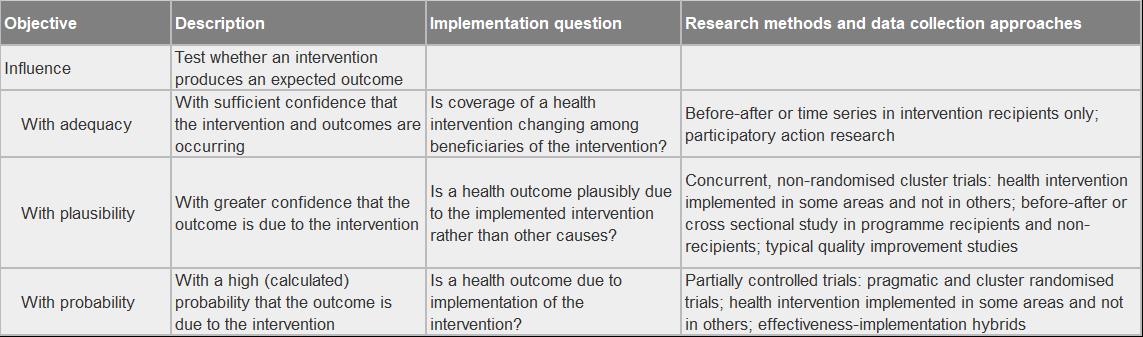

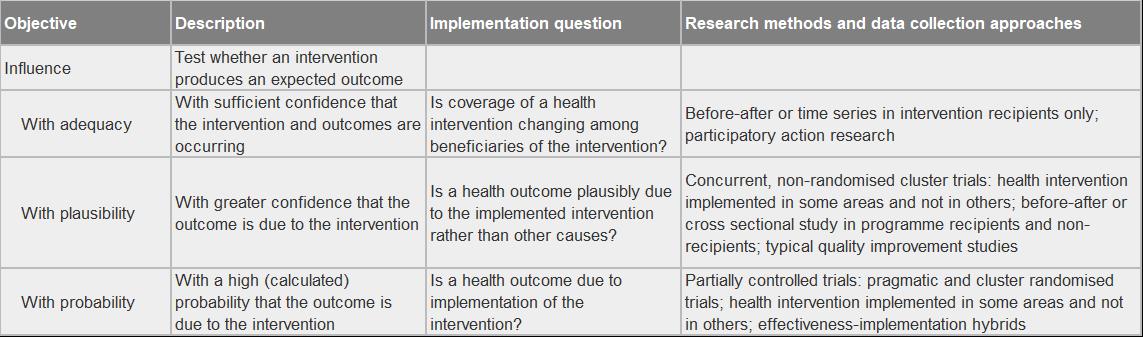

The table below summarizes the influence objective for implementation research.[2]

So… how does implementation research intersect with embedded research and evaluation? It comes down to involvement. Program managers, policy makers, and other stakeholders take part in designing and conducting the research, interpreting the results, and developing recommendations. According to the online article “Strengthening health systems through embedded research,” “Embedded research conducted in partnership with policy-makers and implementers, integrated in different health systems settings and that takes into account context-specific factors can ensure greater relevance in policy priority-setting and decision-making.”[3]

This involvement is critical for understanding both the context of the program interventions and their outcomes. Robust vetting of the findings becomes possible only by combining the knowledge of those deeply engaged in the program or policy with the independent objectivity of the researchers.

In the Next Issue

In the next issue, I will consider the remaining implementation research objectives discussed in the BMJ article: ‘explain’ and ‘predict’.

[1] Peters, D. H., Adam, T., Alonge, O., Agyepong, I. A., & Tran, N. (2013). Implementation research: what it is and how to do it. BMJ, 347, f6753. https://doi.org/10.1136/bmj.f6753

[2] Ibid., Table 2.

[3] Ghaffar, A., Langlois, E. V., Rasanathan, K., Peterson, S., Adedokun, L., & Tran, N. T. (2017). Strengthening health systems through embedded research. Bulletin of the World Health Organization, 95, 87. http://dx.doi.org/10.2471/BLT.16.189126

About This Blog

We are on the brink of an evaluation renaissance. Smart grids, smart meters, smart buildings, and smart data are prominent themes in the industry lexicon. Smarter evaluation and research must follow. To explore this evaluation renaissance, I am looking both inside and outside the evaluation community in search of fresh ideas, new methods, and novel twists on old methods. I am also looking to others for their thoughts and experiences on advancing evaluation and research practice.

So, please…stay tuned, engage, and always, always question. Let’s get smarter together.