Pies, Nice Dinners, and Broken Windows – Attribution, Causality, and NTG

Welcome back to the Challenges of Estimating NTG, where we explore the challenges of assigning a numeric counterfactual value to a complex decision-making process. Part 1 discussed the baseline in free-ridership questions to determine what would have happened in the absence of program intervention. As an example, without receiving an incentive for installing a high-efficiency A/C system, what level of efficiency would a participant have installed?

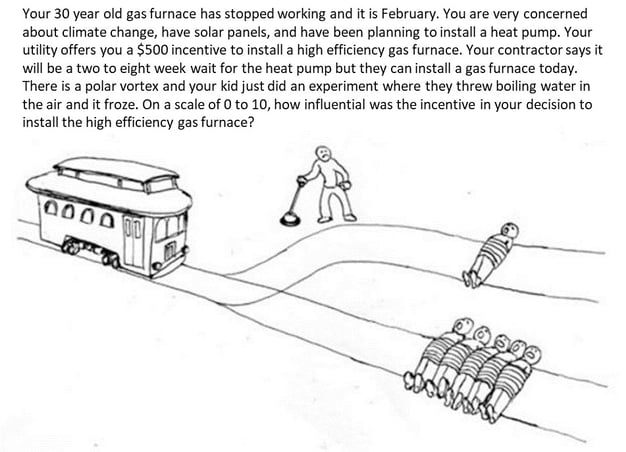

As described in Part 1, program administrators try to maximize the savings that can be attributed to the program, and net-to-gross research attempts to quantify this attribution. But is attribution what we really should be studying? Welcome to the evaluators’ trolley problem.

Dividing Up a Pie or Planning a Nice Dinner?

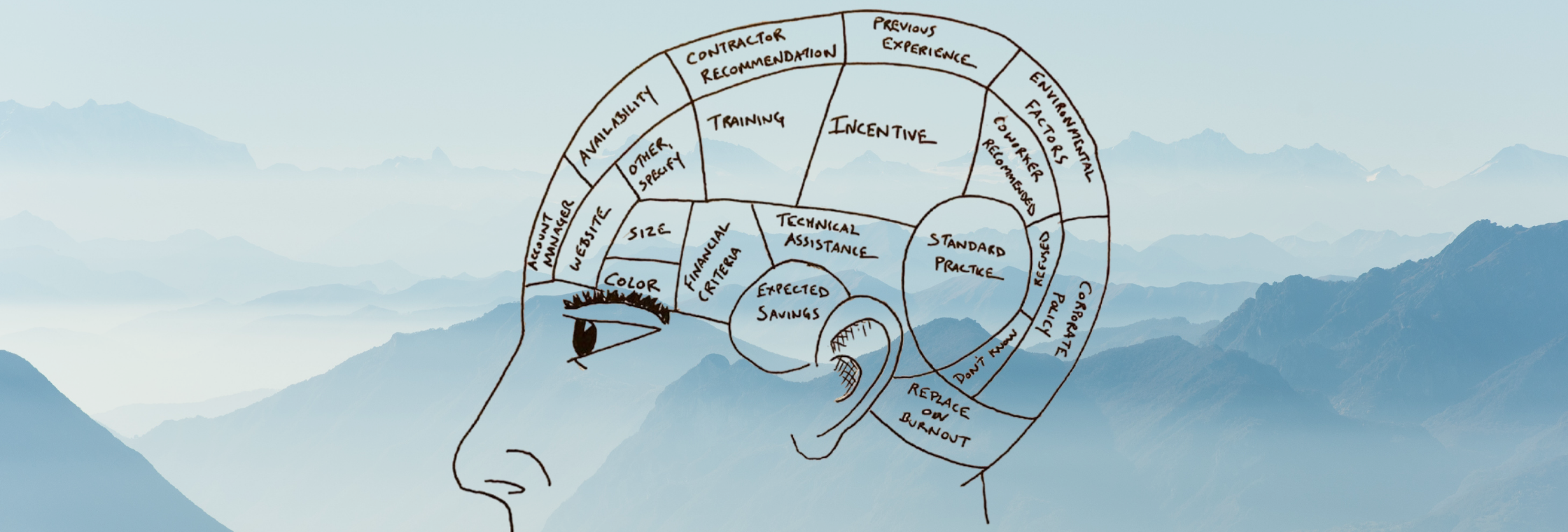

At the most basic level, the decision to install a high-efficiency widget is based on multiple factors, each with its share of the overall decision. Maybe most (75%) of someone’s decision to purchase an ENERGY STAR refrigerator over a non-ENERGY STAR model was because their utility gave them a $50 rebate, but a small part of the decision (25%) was because the ENERGY STAR model was in stock and the alternative had a three-week wait or was a different color. It is easy to visualize this as a pie in which the program gets a huge slice of credit and “other factors” get a smaller slice. If more factors are involved in the decision, the size of the decision pie does not change; it is just cut up into more slices of credit.

But what if this is too simplistic for most energy-related decisions? For example, when someone is considering installing solar panels on their house, many factors may be considered: a utility rebate, a state tax credit, a federal tax credit, the desire to have a smaller carbon footprint, the need to one-up the Joneses, etc. Some of these factors may be conditions that are necessary but not sufficient. Perhaps we, as an industry, need to shift from the concept of attribution to the idea of contribution. Instead of thinking of the intervention of an energy efficiency program as a slice of a pie, maybe we need to think of it as one course of an amazing dinner: something that is good on its own but is infinitely better when paired with the right side dish or wine.

Causality

After pondering this for years, I was excited (in a way, I guess) when I read an interesting article about COVID-19 comorbidities and causal thinking. Although the article discussed a much more serious topic than properly attributing savings to energy efficiency programs, both areas center around the idea of the counterfactual: would an outcome still have happened in an alternate reality where a potential cause did not happen?

The article discusses a classic example of someone throwing a rock through a window. If a rock wasn’t thrown, then the window wouldn’t have broken. Therefore, it is simple and obvious that the rock must have caused the window to break.

But what if two people were throwing rocks at the window at the same time? Which rock caused the window to break? The article asks: “Suppose Veronica and Logan both threw rocks at the same window at the same time. Did Veronica’s rock cause the window to break? According to counterfactual logic, no, because if she hadn’t thrown a rock, it still would have broken due to Logan’s rock. But the same reasoning applies to Logan’s rock. Yet the window did break. Something must have caused it.”

What if the two people were throwing different-sized rocks? Would this change the causality of the window breaking or the attribution of whose fault it was? Now consider the decision-making process of purchasing an air source heat pump, with a utility program throwing a “rock” along with the rocks of a multitude of other factors, each of a different size. Does the concept of divvying up pieces of an attribution pie best fit this?
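The two-rock puzzle can be sketched as a simple "but-for" test: switch off one factor at a time and check whether the outcome changes. The snippet below is only an illustration under stated assumptions. The function `but_for_causes`, the factor names, and the either/or and both-required decision rules are all hypothetical and are not drawn from the article or from any NTG algorithm.

```python
from typing import Callable, Dict

Outcome = Callable[[Dict[str, bool]], bool]


def but_for_causes(factors: Dict[str, bool], outcome: Outcome) -> Dict[str, bool]:
    """For each factor, report whether removing it alone changes the outcome."""
    actual = outcome(factors)
    result = {}
    for name in factors:
        # Counterfactual world: same as reality, except this one factor is absent.
        counterfactual = dict(factors, **{name: False})
        result[name] = outcome(counterfactual) != actual
    return result


# Two rocks: either one alone is enough to break the window (logical OR).
rocks = {"veronica": True, "logan": True}
rock_result = but_for_causes(rocks, lambda f: f["veronica"] or f["logan"])

# Hypothetical solar decision: both incentives are necessary (logical AND).
incentives = {"utility_rebate": True, "federal_tax_credit": True}
solar_result = but_for_causes(
    incentives, lambda f: f["utility_rebate"] and f["federal_tax_credit"]
)

print(rock_result)   # {'veronica': False, 'logan': False}
print(solar_result)  # {'utility_rebate': True, 'federal_tax_credit': True}
```

In the first case, neither rock passes the but-for test even though the window broke. In the second, each incentive passes it for the entire installation. Neither result maps cleanly onto slices of a pie that sum to 100%.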

Question Design

Bringing those larger philosophical questions back down to earth, let’s focus on the design of a question in existing NTG batteries of questions. Are questions phrased in a way that measures the concept being researched? And are current NTG batteries asking about attribution or contribution? Interestingly, some ask about both.

In Illinois, New York, California, and probably other states[1], the free-ridership algorithm currently includes questions that ask about the relative importance of the program intervention. The surveys ask respondents to rate the relative importance of the program compared to other non-program factors. This means that if there was any factor other than the program that played into a participant’s decision to install the energy-efficient widget, then the program cannot claim full credit for the savings.

Additionally, in the case of Illinois and California (at least), these questions about the relative importance of the program are asked (and are included in calculations) alongside questions that ask about the absolute importance of the program (e.g., if the program did not exist, how likely would you…). Regardless of whether NTG questions should be asked in terms of relative or absolute importance, it is clear that they are measuring two different concepts and should not be averaged or otherwise combined.
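To see why the two kinds of questions can diverge, consider a made-up respondent. The sketch below is not the Illinois, California, or New York algorithm. The 0-10 scales, the function names, and the scoring formulas are assumptions chosen only to illustrate the point.

```python
from typing import List


def relative_share(program_rating: float, other_ratings: List[float]) -> float:
    """Program's importance rating as a share of all rated factors (relative)."""
    total = program_rating + sum(other_ratings)
    return program_rating / total if total else 0.0


def counterfactual_influence(likelihood_without_program: float) -> float:
    """1 minus the stated 0-10 likelihood of installing anyway (absolute)."""
    return 1.0 - likelihood_without_program / 10.0


# Hypothetical respondent: the program and one other factor are both rated
# 10 out of 10, and the respondent says they would not have installed the
# measure at all without the program (likelihood 0 out of 10).
relative = relative_share(10, [10])
absolute = counterfactual_influence(0)
blended = (relative + absolute) / 2

print(relative, absolute, blended)  # 0.5 1.0 0.75
```

Here the relative score says the program deserves half the credit, while the absolute score says nothing would have happened without it. The blended 0.75 answers neither question.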

Moving Forward

As I mentioned in Part 1, I think the difficulty of putting a numeric value on a complex decision-making process is what makes NTG research so interesting. While there is never going to be a perfect method for doing this, I think it is still important for working groups to incrementally improve NTG methodology to estimate the best value possible. As stewards of ratepayer funds, program administrators should work to ensure that their investments are meaningful.

However, with the climate crisis worsening every day, we should also consider that every contribution to the larger goal of reducing carbon emissions is crucial. Resources should be spent trying to save that additional kWh rather than fighting over who can claim it. New York has recently shifted to a gross savings framework with this understanding.

Too often, NTG research is seen as the final step of an impact evaluation that “trues up” savings to get from a theoretical maximum to the level for which the program is responsible. However, this mindset can prevent further investigation into the “why?” Placing less importance on falsely precise NTG values and more importance on participants’ decision-making process will allow evaluators to focus more on helping program administrators improve the design of programs. This will lead to more savings overall, even if the credit, like a nice meal, should be shared.

[1] I do not claim to have perfect knowledge of every NTG algorithm in North America. Additionally, Illinois at the very least is considering removing this question from the non-residential algorithm.
