The Perils of Deemed Savings

In addition to understanding energy efficiency, evaluators search for efficiencies in cost and time. Because of schedule and budget constraints, we focus evaluation resources on the most impactful tasks, such as studying the measures or elements of a program with the most savings and/or the most uncertainty in estimated savings.

Because we cannot measure everything all the time (nor do we have time machines), evaluations are built on assumptions. Many measures have been well researched and thus have accurate and stable parameter estimates. Utility commercial and industrial energy efficiency programs often offer these measures through a “prescriptive” program, where savings can be reliably estimated from a limited number of questions on the rebate application form (e.g., quantity, building type, and square footage).[1]

In many jurisdictions, utilities or the utility commission develop a technical reference manual (TRM) that aggregates agreed-upon values and methodologies for calculating the energy savings of various measures. TRMs offer many benefits, including providing transparent, consistent, and documented assessments of energy savings that can be used by multiple parties, and reducing costs, thereby increasing the impact of each ratepayer dollar spent on energy efficiency.

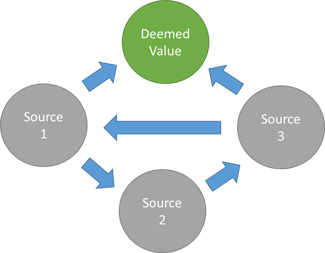

Often, TRMs include “deemed values” which represent the consensus of stakeholders about the value to use.[2] As described in the Illinois TRM, the most accurate estimate of savings is based on the actual values for the individual measure, followed by the use of deemed values input into a savings algorithm (e.g., hours of use), followed by a single deemed savings value (e.g., 500 kWh).
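To make the three tiers concrete, here is a minimal Python sketch for a lighting retrofit. The savings formula is a generic simplification (fixture count times wattage reduction times hours), not the Illinois TRM algorithm, and the fixture count, wattages, and metered hours are hypothetical. Only the 3,038 deemed hours (the Illinois high school value quoted later in this article) and the 500 kWh example figure come from the text.

```python
def lighting_savings_kwh(quantity, watts_base, watts_efficient, hours):
    """Simplified annual kWh savings: fixtures x wattage reduction x hours.

    A generic illustration only; real TRM algorithms usually include
    additional factors that are omitted here.
    """
    return quantity * (watts_base - watts_efficient) * hours / 1000.0


# Values taken from this article
DEEMED_HOURS_HIGH_SCHOOL = 3038  # deemed input (annual operating hours)
DEEMED_SAVINGS_KWH = 500         # single deemed savings value (example figure)

# Hypothetical project details, invented for illustration
quantity = 10          # fixtures replaced
watts_base = 32        # baseline fixture wattage
watts_efficient = 15   # efficient fixture wattage
metered_hours = 3400   # hours actually observed at this site

# Tier 1: actual values for the individual measure
tier1 = lighting_savings_kwh(quantity, watts_base, watts_efficient, metered_hours)

# Tier 2: deemed input fed into the savings algorithm
tier2 = lighting_savings_kwh(
    quantity, watts_base, watts_efficient, DEEMED_HOURS_HIGH_SCHOOL
)

# Tier 3: a single deemed savings value, independent of project details
tier3 = DEEMED_SAVINGS_KWH

print(f"Actual inputs:        {tier1:.1f} kWh")
print(f"Deemed hours input:   {tier2:.1f} kWh")
print(f"Deemed savings value: {tier3:.1f} kWh")
```

With these made-up inputs, the three tiers give about 578, 516, and 500 kWh. The gap between them is the price of not collecting site-specific data.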

Despite the many benefits of using deemed values, program implementers and evaluators should be aware of their limitations so that the values are used correctly and with the appropriate level of skepticism. Here are a few examples where deemed values can get you into trouble.

Not Accounting for Averages

Deemed values are typically based on averages across the region or among a wide range of customers rather than for a particular project. This means that no single project (or very few, at least) will have savings or input values that match the average exactly. In practice, and despite our knowing better, it is very tempting to compare the details of a specific project with the (average) deemed values, decide that the project is atypical, and treat it differently. For example, consider a project completed in a high school. In Illinois, the deemed annual operating hours value for a high school is 3,038 hours. However, this particular high school also offers a day care as well as summer and weekend adult education classes, which extend its annual operation beyond the times when high schools are “usually” open.

The implementer or evaluator may decide that, because of this, the operating hours of a college (3,395) better fit the use of this building and use that input when calculating savings. This is wrong. We must operate under the assumption that the deemed value was based on a representative sample of that building type and that there is some distribution around the mean – some schools have much lower operating hours and some have much higher operating hours.[3] By shifting this school into a different category, we would be introducing bias into both the high school and college categories and reducing the accuracy of our results.
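A small simulation can illustrate the bias. This is a sketch under stated assumptions, not an analysis of real data: it assumes the deemed 3,038 hours is the true mean for high schools, invents a normal spread around it (the standard deviation is hypothetical), and invents a rule that any school above a hypothetical threshold gets reassigned to the college value of 3,395 hours.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

HIGH_SCHOOL_HOURS = 3038  # deemed value quoted in this article
COLLEGE_HOURS = 3395      # deemed value quoted in this article
ASSUMED_SD = 400          # hypothetical spread around the mean
THRESHOLD = 3300          # hypothetical cutoff for "looks atypical"
N_SCHOOLS = 10_000

# Hypothetical "true" operating hours for a population of high schools
true_hours = [random.gauss(HIGH_SCHOOL_HOURS, ASSUMED_SD) for _ in range(N_SCHOOLS)]
true_mean = statistics.mean(true_hours)

# Approach A: apply the deemed high school value to every school
deemed_all = [HIGH_SCHOOL_HOURS] * N_SCHOOLS

# Approach B: move long-hours schools into the college category
reclassified = [
    COLLEGE_HOURS if hours > THRESHOLD else HIGH_SCHOOL_HOURS
    for hours in true_hours
]

# Bias = average assumed hours minus average true hours
bias_deemed = statistics.mean(deemed_all) - true_mean
bias_reclassified = statistics.mean(reclassified) - true_mean

print(f"Bias using deemed value for all: {bias_deemed:+.1f} hours")
print(f"Bias after reclassifying:        {bias_reclassified:+.1f} hours")
```

Under these assumptions, the deemed value applied to every school is off for each individual building but close to right on average. The reclassified portfolio overstates hours on average, because the long-hours schools were already part of the mean. The sketch covers only the high school side; the college category would be distorted too, since it would now contain buildings that were never part of its sample.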

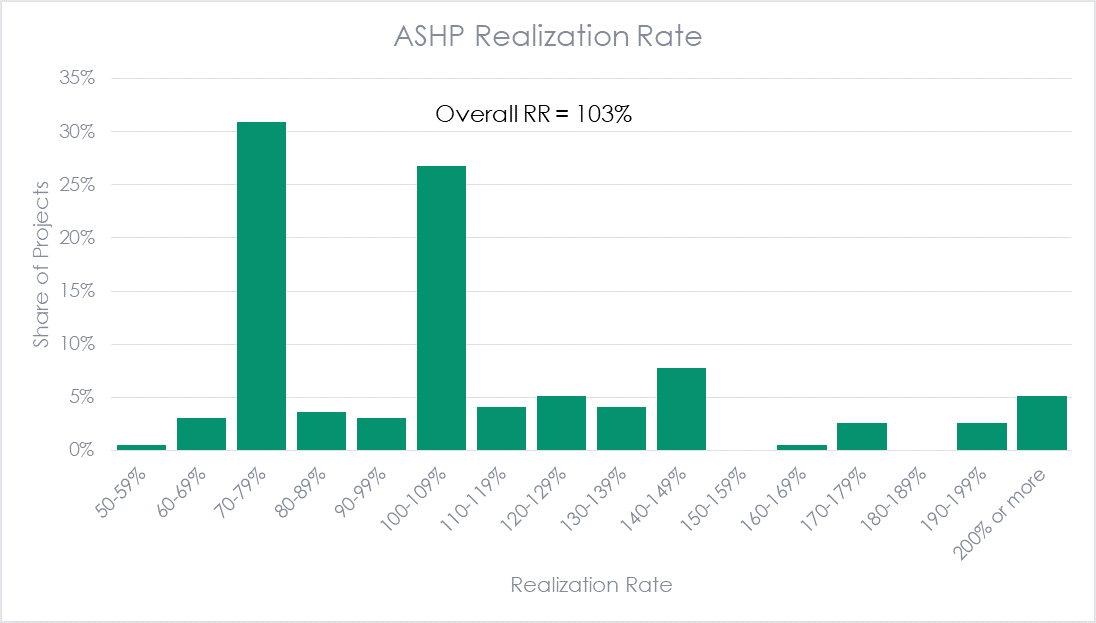

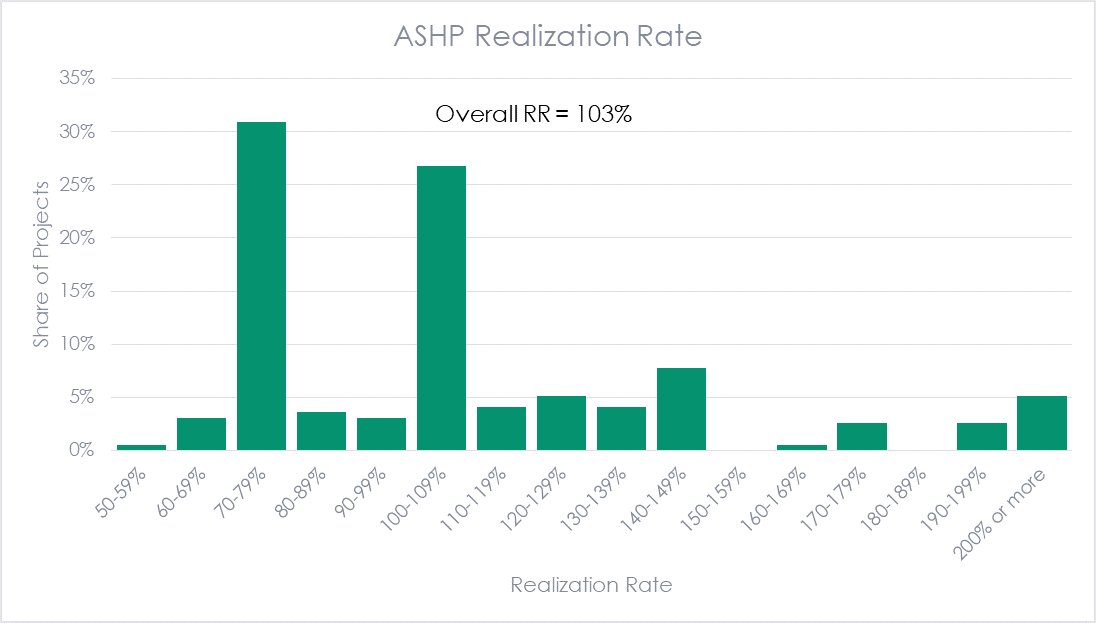

In some cases, programs use a deemed savings value for all projects based on the mean input values. The same concept applies here: the savings are based on an average of all projects, and any given project will likely not match the deemed savings exactly. As an example, below is the distribution of realization rates for a recent air source heat pump evaluation Michaels conducted. The overall realization rate is 103% in this example (i.e., essentially the deemed value), but the realization rates of individual projects are widely distributed (and not normally distributed).
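The arithmetic behind that observation can be sketched in a few lines of Python. A realization rate is evaluated savings divided by claimed savings, and the program-level rate is the ratio of the totals. The project values below are invented for illustration and are not the data from the Michaels evaluation; they were chosen only so that the overall rate lands near the 103% quoted above.

```python
# Hypothetical deemed (claimed) savings per project, in kWh
DEEMED_SAVINGS_KWH = 1000

# Hypothetical evaluated (verified) savings for five projects, in kWh
verified_kwh = [350, 600, 900, 1450, 1850]

# Project-level realization rate: verified savings / claimed savings
project_rates = [v / DEEMED_SAVINGS_KWH for v in verified_kwh]

# Program-level realization rate: total verified / total claimed
total_claimed = DEEMED_SAVINGS_KWH * len(verified_kwh)
overall_rate = sum(verified_kwh) / total_claimed

print("Project realization rates:", [f"{r:.0%}" for r in project_rates])
print(f"Overall realization rate:  {overall_rate:.0%}")
```

In this invented example, no project comes within 10 percentage points of the overall rate, yet the deemed value is nearly right for the program as a whole.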

Relying on Low Rigor or Mysterious Assumptions

Another way deemed values can be problematic is if they are not based on rigorous research. Before diving into this, I want to state that I use hundreds of assumptions of varying rigor every day. Evaluators and implementers do not have perfect information about everything and have to rely on proxies, expert judgement, or imperfect studies for many inputs, hoping that they can revisit and update the weak points in future research. The issue becomes precarious when those placeholder assumptions are treated as incontrovertible. There are many examples of TRMs that report a deemed value to multiple decimal places when that value is ultimately sourced to a discussion with a product representative or a similar qualitative estimate.

Effective useful life (EUL) estimates are great examples. Michaels is currently working on a measure life study and we have quickly found that sourcing for EUL values is a winding, often circular, path and usually not based on much data. Despite this, the persistence of savings based on these assumptions is becoming a key component of program goals.

On a whim, I decided to jump into the rabbit hole and follow the EUL sourcing to its conclusion (or at least as far as I could on a Saturday). I randomly chose efficient compressed air systems as the measure of interest. Let’s start with the latest version (v8) of the New York State TRM. In the NY TRM, the EUL of an air compressor (and most of its components) is 13 years and this is based on “a review of TRM assumptions from Ohio (August 2010), Massachusetts (October 2015), Illinois (February 2017) and Vermont (March 2015). Estimates range from 10 to 15 years.”[4]

Diving into these sourced TRMs, I found that the Ohio TRM value is based on a measure life study from Wisconsin, which references a Massachusetts measure life study from 2005. This study is a literature review of five Massachusetts utilities (since largely consolidated) and ten national utilities/states. The sources behind the five Massachusetts utilities were not available, so I dug into the other utilities’ values. Many of the reviewed utilities did not provide sources. The ones that did were based on less-than-rigorous data. For example, the Texas estimate of 10 years exists because the state uses a 10-year EUL for all measures. PSE&G deemed the EUL to be 15 years because all lighting measures were given a 10-year EUL and all non-lighting measures were given an EUL of 15 years.

The California estimate is based on a 2001 study of SDG&E’s 1994 and 1995 installations. That study looked at one compressed air system in the sample frame, found it was still in operation after six years and therefore the ex ante EUL estimate of 20 years (based on “engineer judgement”) was not changed. I was unable to dig up the Efficiency Maine TRM from that time, but did find that the current TRM (v2021) has an EUL of 15 years, which is based on the 2005 MA measure life study, of which Efficiency Maine is one of the sources. At the time, the Illinois deemed lifetime was 10 years but was not sourced.[5] The Massachusetts TRM assumption of 15 years was not sourced, but was likely from the 2005 measure life study. The Efficiency Vermont value of 10 years was also not sourced. It appears that the EUL for this measure is largely based on unsourced estimates, broad measure life edicts not based on the actual equipment, and a study involving one compressed air system.

By this time, I had used up several notebooks keeping track of all of this, had moved over to drawing on a mirror like Matt Damon in Good Will Hunting, and needed to stop while I was still sane. However, I hope this exercise shows that deemed values, especially EULs, should be used with caution and we should not assume that a value is correct because it has been used elsewhere.

[1] Utilities offer less researched measures or those with more savings variation through “Custom” programs, which require a more detailed analysis from the program (and then the evaluator) to estimate savings.

[2] Ultimately, the accuracy of a savings estimate depends on the quality of input assumptions and the algorithm. This discussion is only focusing on the input assumptions – I’ll leave the algorithms to the engineers.

[3] This is a large leap of faith and requires trust in the rigor of the research on which the deemed inputs are based. Having a granular table of input options is helpful but only if the options are well defined and well researched.

[4] New York Standard Approach for Estimating Energy Savings from Energy Efficiency Programs – Residential, Multi-family, and Commercial/Industrial Measures, Version 8. Appendix P.

[5] The Illinois deemed EUL value has since been changed to 13 years based on a Department of Energy Technical Support Document.